Reinforcement Learning for Energy-Efficient Control

At one of Cambridge Zero’s recent Research Symposia, Refficiency’s Scott spoke about a new reinforcement learning technique for effective temperature regulation and for reducing greenhouse gas (GHG) emissions.

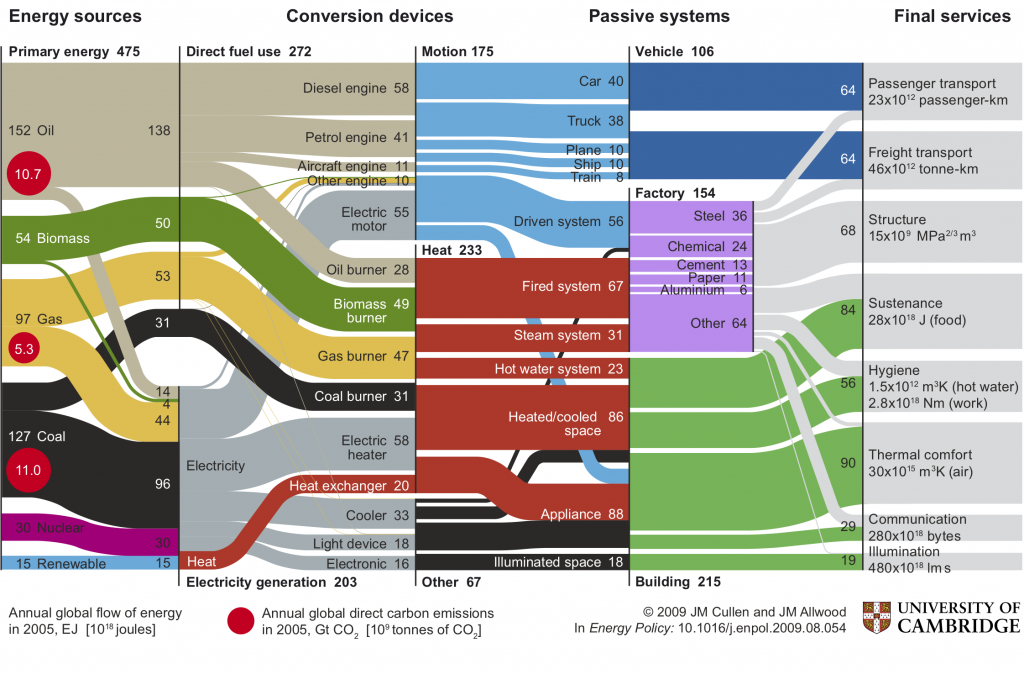

Cullen and Allwood’s 2009 Sankey diagram of global energy flows shows that the biggest single use of energy worldwide is regulating temperature, which accounts for approximately 50% of total energy demand. If we can find ways to heat and cool more efficiently, using less energy, we would have an effective tool for mitigating climate change.

Source: https://www.sciencedirect.com/science/article/

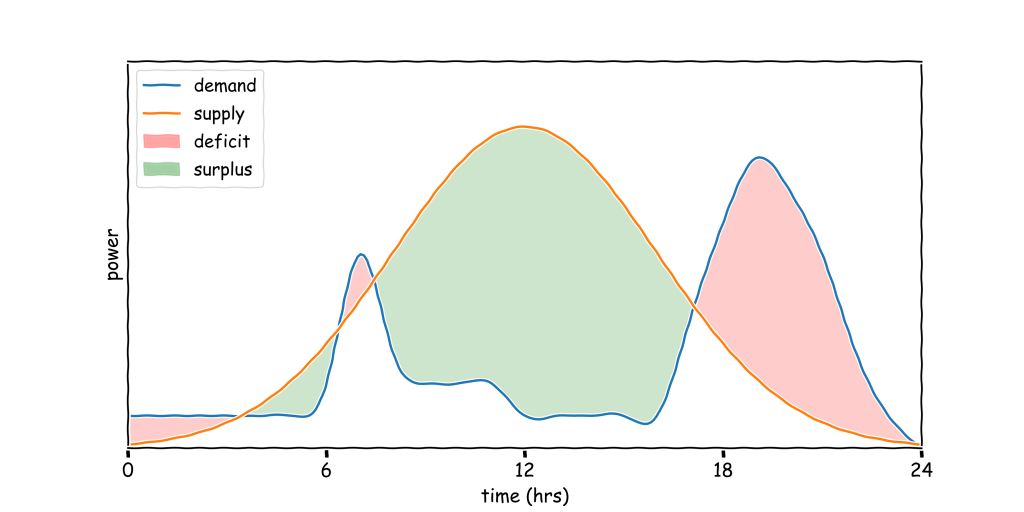

Consider, hypothetically, a home with solar panels. At some times of day the panels supply more energy than the occupants need, and at other times too little, so the home swings between surpluses and deficits. The deficits are the problem: they are when the national grid spins up gas-fired power stations to make up the shortfall. The resulting emissions could have been avoided had we shifted the surplus renewable energy to the times of day when it was actually needed.
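To make the surplus and deficit idea concrete, here is a minimal Python sketch. The hourly `SOLAR` and `DEMAND` profiles are made-up illustrative numbers, not data from the talk, and `shift_load` is a hypothetical helper that moves a flexible load (such as pre-heating) from one hour to another, assuming the shifted energy is used 1:1 with no storage losses. Every kWh of deficit is energy the grid has to supply.

```python
# Illustrative hourly profiles (kWh per hour, index 0 = midnight) for a home with solar panels.
# These numbers are invented for the example; they are not measurements from the talk.
SOLAR = [0, 0, 0, 0, 0, 0, 0.2, 0.8, 1.5, 2.2, 2.8, 3.0,
         3.0, 2.7, 2.1, 1.4, 0.7, 0.2, 0, 0, 0, 0, 0, 0]
DEMAND = [0.4] * 6 + [0.9, 1.2, 0.8, 0.6, 0.5, 0.5,
                      0.6, 0.5, 0.5, 0.7, 1.3, 1.8, 2.0, 1.6, 1.1, 0.8, 0.6, 0.5]


def surplus_and_deficit(supply, demand):
    """Total energy (kWh) left over when supply > demand, and missing when demand > supply."""
    surplus = sum(max(s - d, 0.0) for s, d in zip(supply, demand))
    deficit = sum(max(d - s, 0.0) for s, d in zip(supply, demand))
    return surplus, deficit


def shift_load(demand, from_hour, to_hour, kwh):
    """Hypothetical helper: return a copy of `demand` with `kwh` of flexible load moved between hours."""
    if kwh > demand[from_hour]:
        raise ValueError("cannot shift more energy than is demanded in that hour")
    shifted = list(demand)
    shifted[from_hour] -= kwh
    shifted[to_hour] += kwh
    return shifted


surplus, deficit = surplus_and_deficit(SOLAR, DEMAND)
# Move 1.5 kWh of heating from the 6pm peak (no sun) into the midday surplus.
shifted = shift_load(DEMAND, from_hour=18, to_hour=12, kwh=1.5)
_, deficit_after = surplus_and_deficit(SOLAR, shifted)
```

Because noon still has spare solar after the shift, the deficit the grid must cover falls by the full 1.5 kWh. A real controller has to decide such shifts without knowing the future exactly, which is what motivates the learning-based approach below.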

Source: https://enjeeneer.io/

The problem is that many current heating systems are regulated by Rule-Based Controllers (RBCs), which are very rudimentary: the heater or cooler simply switches on when the temperature drifts below or above the setpoint. RBCs do not maximise energy efficiency, nor do they interact with the grid to draw power when grid carbon intensity is lowest and so minimise GHG emissions. New approaches are therefore needed, and Scott proposes reinforcement learning as a useful method of controlling these systems.
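A rule-based controller of the kind described above can be sketched in a few lines of Python. This is a generic deadband thermostat rule, assumed for illustration; the 0.5 °C deadband, the 2 kW power draw and the function names are hypothetical. To keep it short, `hourly_emissions_g` takes a fixed temperature trace rather than simulating the building.

```python
def rule_based_controller(temp_c: float, setpoint_c: float, deadband_c: float = 0.5) -> str:
    """Thermostat-style rule: heat below the deadband, cool above it, otherwise stay off.

    Note what is missing from the inputs: no forecast and no grid carbon intensity,
    so the controller has no way to choose *when* to draw power.
    """
    if temp_c < setpoint_c - deadband_c:
        return "heat"
    if temp_c > setpoint_c + deadband_c:
        return "cool"
    return "off"


def hourly_emissions_g(temps_c, carbon_g_per_kwh, setpoint_c=21.0, power_kw=2.0):
    """Emissions (gCO2) of the RBC over hourly steps, assuming a fixed power draw whenever it is on."""
    total = 0.0
    for temp, ci in zip(temps_c, carbon_g_per_kwh):
        if rule_based_controller(temp, setpoint_c) != "off":
            total += power_kw * 1.0 * ci  # kW * 1 h * gCO2/kWh
    return total
```

For example, `hourly_emissions_g([19.0, 21.0, 23.0], [100.0, 200.0, 300.0])` heats in the first hour and cools in the third, giving 200 + 600 = 800 gCO2: the emissions depend only on when the temperature happens to cross the deadband, not on how clean the grid is at that moment.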

Reinforcement learning methods fall broadly into two families: Model-Free and Model-Based Reinforcement Learning. Model-Free Reinforcement Learning often requires millions of timesteps of data, which in practice can only be supplied to the agent by a simulator. If we wanted to control every building in the world, we would need to build a simulator for every building in the world, which is not a scalable solution. Model-Free methods do, however, offer better performance, at the cost of data inefficiency. Model-Based Reinforcement Learning is more data efficient, and some studies show it can produce building control policies after only a few hours of interaction with the environment. This data efficiency, however, comes at the cost of performance.
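A model-based agent first learns how the building responds to its actions and then plans with that learned model. The sketch below illustrates why this can be data efficient, assuming a hypothetical two-parameter linear thermal model, `next = temp + a * (outdoor - temp) + b * heat_on`. The "true" values `TRUE_A` and `TRUE_B`, the noise level and all function names are illustrative assumptions, not Scott's model. Because only two numbers have to be learned, twelve hours of logged transitions are enough to estimate them by least squares, whereas a model-free agent typically has to learn the value of actions directly from rewards, which needs far more interaction.

```python
import random

# Hypothetical toy building; these parameters are unknown to the agent and only used to generate data.
TRUE_A, TRUE_B = 0.1, 1.0  # a: fraction of indoor/outdoor gap lost per hour, b: degrees C added per hour of heating


def collect_transitions(n_hours, rng, noise_sd=0.05):
    """Simulate n_hours of interaction, toggling the heater each hour to excite the dynamics."""
    transitions, temp = [], 19.0
    for hour in range(n_hours):
        outdoor = rng.uniform(0.0, 10.0)
        heat_on = hour % 2
        next_temp = temp + TRUE_A * (outdoor - temp) + TRUE_B * heat_on + rng.gauss(0.0, noise_sd)
        transitions.append((temp, outdoor, heat_on, next_temp))
        temp = next_temp
    return transitions


def fit_thermal_model(transitions):
    """Least squares for (next - temp) = a * (outdoor - temp) + b * heat_on via the 2x2 normal equations."""
    s11 = s12 = s22 = s1y = s2y = 0.0
    for temp, outdoor, heat_on, next_temp in transitions:
        x1, x2, y = outdoor - temp, float(heat_on), next_temp - temp
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        s1y += x1 * y
        s2y += x2 * y
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("data cannot separate heating from heat loss; collect more varied data")
    a = (s1y * s22 - s12 * s2y) / det  # Cramer's rule
    b = (s11 * s2y - s12 * s1y) / det
    return a, b


def predict(model, temp, outdoor, heat_on):
    """One-step temperature prediction from a fitted (a, b) model."""
    a, b = model
    return temp + a * (outdoor - temp) + b * heat_on
```

Calling `fit_thermal_model(collect_transitions(12, random.Random(1)))` should return estimates close to `(0.1, 1.0)`. Real buildings need richer models than this, which is where the data requirements and the performance gap discussed above come from.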

Ideally, then, we want an approach that combines the best of both worlds: the large energy-efficiency gains of model-free methods with the data efficiency of model-based ones. Scott proposes that this is possible using Probabilistic Model-Based Reinforcement Learning.
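One common way to make model-based control probabilistic is to keep an ensemble of plausible models and plan against all of them, so the controller accounts for what it does not yet know. The sketch below is a heavily simplified illustration of that general idea, not a reproduction of Scott's method. Assumptions, all hypothetical: the five `(a, b)` pairs in `ENSEMBLE` stand in for learned models (for instance, fitted on bootstrap resamples of a few hours of data), the cost weights are arbitrary, and exhaustive search over a 4-hour horizon replaces the sampling-based optimisers used in practice. Unlike an RBC, the cost weights emissions by a grid carbon-intensity forecast.

```python
import itertools
import statistics

# Hypothetical ensemble: each (a, b) is one plausible thermal model of the building.
# Disagreement between members represents the controller's uncertainty about the dynamics.
ENSEMBLE = [(0.09, 0.95), (0.10, 1.00), (0.11, 1.05), (0.10, 0.90), (0.12, 1.10)]


def rollout_cost(model, seq, temp, outdoor_fc, carbon_fc, setpoint, heater_kw, comfort_weight):
    """Cost of one on/off heating schedule under one model: emissions (kgCO2) plus squared under-heating."""
    a, b = model
    cost = 0.0
    for u, t_out, ci in zip(seq, outdoor_fc, carbon_fc):
        temp = temp + a * (t_out - temp) + b * u
        cost += u * heater_kw * ci / 1000.0  # 1-hour step, ci in gCO2/kWh
        cost += comfort_weight * max(0.0, setpoint - temp) ** 2  # discomfort only when too cold
    return cost


def plan_first_action(models, temp, outdoor_fc, carbon_fc, setpoint=21.0,
                      heater_kw=2.0, comfort_weight=1.0, risk_aversion=0.5):
    """Score every schedule by mean + risk_aversion * stdev of cost across the ensemble.

    Only the first action of the best schedule is returned (receding horizon): apply it,
    observe the new temperature, and replan at the next step.
    """
    best_seq, best_score = None, float("inf")
    for seq in itertools.product((0, 1), repeat=len(carbon_fc)):
        costs = [rollout_cost(m, seq, temp, outdoor_fc, carbon_fc, setpoint, heater_kw, comfort_weight)
                 for m in models]
        score = statistics.fmean(costs) + risk_aversion * statistics.pstdev(costs)
        if score < best_score:
            best_seq, best_score = seq, score
    return best_seq[0]
```

For example, `plan_first_action(ENSEMBLE, 19.5, [6.0] * 4, [350.0, 300.0, 120.0, 90.0])` weighs comfort against a forecast in which the grid gets cleaner later on, so heating can be deferred to low-carbon hours when comfort allows. Raising `risk_aversion` makes the controller favour schedules whose outcome the ensemble members agree on.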

Scott tested this technique in building energy simulations, placing his agent alongside existing state-of-the-art agents. Starting with no prior knowledge of the building, each agent had to minimise emissions over one simulated year whilst maintaining thermal comfort. His approach produced the lowest GHG emissions in two of the three buildings tested (a mixed-use building, an apartment and a seminar centre, each in a different climate), whilst maintaining the temperature better than any of the other approaches. In the mixed-use building it produced 30% fewer emissions than the existing rule-based controller, and in the apartment 10% fewer. Scott’s approach is also easy to deploy, requires no prior knowledge, and can obtain emission-reducing control policies after only a few hours of interaction with the real environment.

Watch Scott’s whole talk on Cambridge Zero’s YouTube channel.

Read more about Scott’s presentation on his blog.

Photo credit: Gritt Zheng